As you all know, pivoting and unpivoting are both supported in SSIS, but implementing a PIVOT is a bit more difficult than implementing an UNPIVOT. This post explains, step by step, how to use both the Pivot and Unpivot transformations that come with SSIS.

Pivot allows us to convert a normalized dataset into a less normalized format by transferring (pivoting) row values into columns. In simple terms, it identifies the distinct values in a designated column and creates a column for each of them (note that in SSIS this is not fully dynamic or automated: the output columns have to be defined by hand). Here is an example.

The example shows how PIVOT works: the values (months) in the OrderMonth column have been converted into columns, each holding the matching OrderAmount value. Now let's see how to do this with SSIS. The query below is the source for pivoting; it returns the order amounts placed by each customer per month and year.

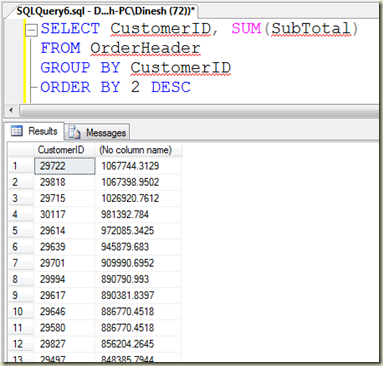

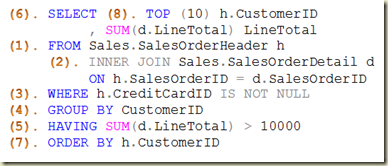

```sql
-- Total order amount per customer, per month and per year (AdventureWorks2008R2)
SELECT
      YEAR(OrderDate) OrderYear
    , p.LastName Customer
    , DATENAME(mm, OrderDate) OrderMonth   -- month name, such as January
    , SUM(SubTotal) OrderAmount
FROM Sales.SalesOrderHeader h
    INNER JOIN Sales.Customer c
        ON c.CustomerID = h.CustomerID
    INNER JOIN Person.Person p
        ON p.BusinessEntityID = c.PersonID
GROUP BY
      YEAR(OrderDate)
    , p.LastName
    , DATENAME(mm, OrderDate)
ORDER BY 1, 2   -- sort by OrderYear, then Customer
```

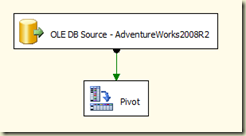

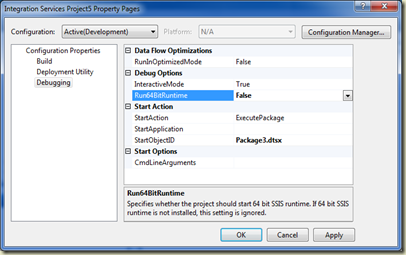

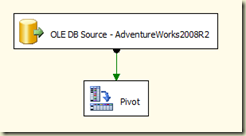
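To make the idea behind the example concrete, here is a minimal Python sketch of what pivoting does to rows shaped like the query's output. It is only a conceptual model, not how SSIS implements the transformation; `pivot_orders`, `MONTHS` and the sample rows are hypothetical, and `None` stands in for an empty cell when a customer placed no orders in a month.

```python
MONTHS = ["January", "February", "March", "April", "May", "June",
          "July", "August", "September", "October", "November", "December"]


def pivot_orders(rows):
    """Pivot (OrderYear, Customer, OrderMonth, OrderAmount) tuples into one
    row per (OrderYear, Customer) with a column for each month."""
    result = {}
    for year, customer, month, amount in rows:
        key = (year, customer)
        if key not in result:
            # Start every month empty; None plays the role of a blank cell.
            result[key] = {"OrderYear": year, "Customer": customer}
            result[key].update({m: None for m in MONTHS})
        # The source query groups by year, customer and month, so each
        # cell is written at most once.
        result[key][month] = amount
    return list(result.values())


sample = [  # hypothetical rows in the shape the query returns
    (2005, "Smith", "July", 1200.0),
    (2005, "Smith", "August", 350.5),
    (2005, "Jones", "July", 99.0),
]
for row in pivot_orders(sample):
    print(row["OrderYear"], row["Customer"], row["July"], row["August"])
```

Each distinct (OrderYear, Customer) pair becomes one output row with 14 columns, which matches the number of output columns configured later in this post.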

To do this in SSIS, create a new project and add a Data Flow Task to the Control Flow. Place an OLE DB Source in the Data Flow Task, connect it to the AdventureWorks2008R2 database, and set the query above as its SQL command. Then drag a Pivot transformation onto the Data Flow and connect the source to it with a data flow path.

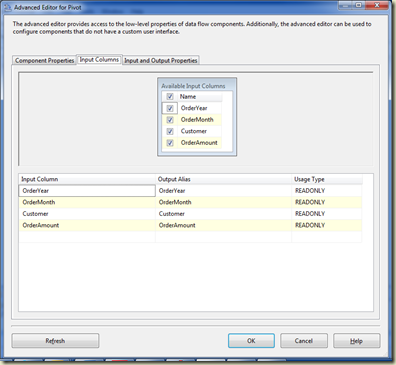

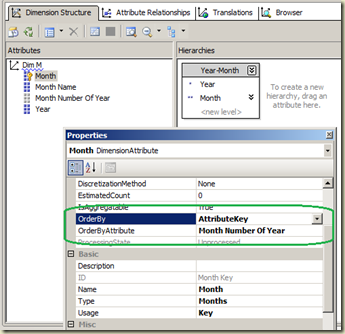

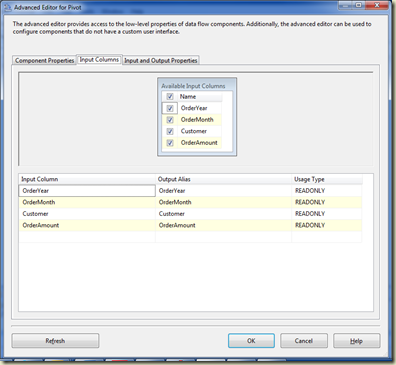

Configuring the Pivot transformation is a little tricky, and not as easy as T-SQL PIVOT. When you open its editor, you get three tabs. The second tab (Input Columns) lets you mark the columns used for pivoting by selecting them from the input.

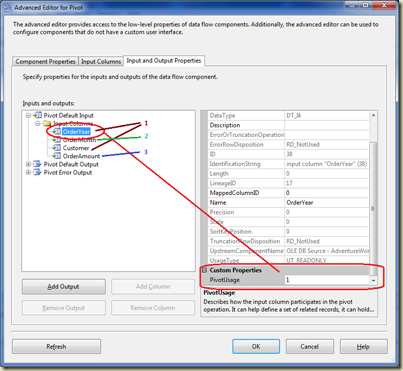

The next tab, Input and Output Properties, tells SSIS how the input columns should be used in the pivot operation. The Pivot Default Input node in the left pane lets us set how each input column is used for pivoting, and the Pivot Default Output node lets us define the output columns based on the input columns and their usage. Let's look at the Pivot Default Input node first.

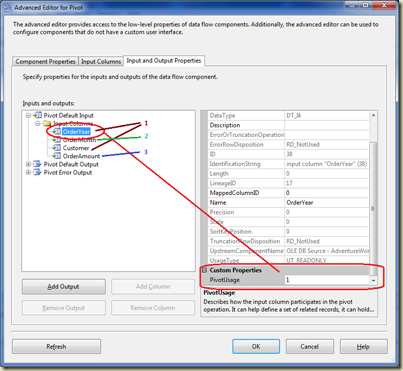

If you expand the next level of Pivot Default Input, Input Columns, you can see all the columns available for the operation. Selecting a column loads its properties into the right pane; one of them, PivotUsage, accepts a numeric value from 0 to 3. Here is how to decide the value of the PivotUsage property:

| Value | Meaning |
|---|---|
| 0 | The column does not participate in pivoting and is passed through unchanged to the output. If pivoting finds multiple values for an output row, only the first of them is used. In our example, no column needs to be set to 0. |
| 1 | The column is part of the set key used to generate the pivot output rows: its values identify which output row an input row belongs to. One or more columns can be set to 1, but their combination must make each record unique, without duplicates. In our example, two columns are set to 1: OrderYear and Customer. |
| 2 | The values in this column (the pivot column) are used to create the columns in the pivot output. In our example, OrderMonth should be set to 2. |
| 3 | The values in this column are used to fill the columns created from the pivot column. In our example, OrderAmount should be set to 3. |

Here is how it is set:

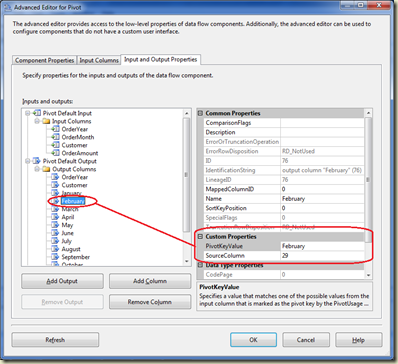

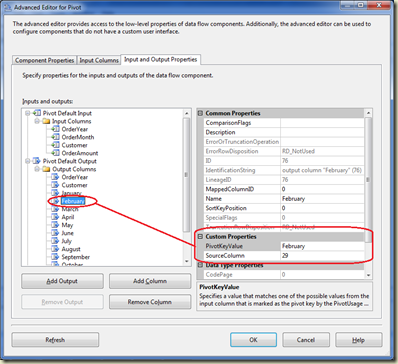
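The PivotUsage codes in the table above can be modelled with a short Python sketch. It is an illustration built on assumptions rather than SSIS's actual algorithm: the hypothetical `pivot` function starts a new output row whenever the set-key values change from the previous row, so it relies on input sorted by the set-key columns (the query's `ORDER BY 1, 2` provides that), keeps the first value for pass-through (0) columns, and raises an error for a pivot value that has no output column. The sample rows are made up.

```python
# PivotUsage codes, as listed in the table.
PASS_THROUGH, SET_KEY, PIVOT_COLUMN, PIVOT_VALUE = 0, 1, 2, 3

MONTHS = ["January", "February", "March", "April", "May", "June",
          "July", "August", "September", "October", "November", "December"]


def pivot(rows, usage, pivot_key_values):
    """Model of a pivot driven by PivotUsage codes.

    rows: input rows as dicts; usage: column name -> PivotUsage code;
    pivot_key_values: pivot-column values that become output columns.
    """
    key_cols = [c for c, u in usage.items() if u == SET_KEY]
    pass_cols = [c for c, u in usage.items() if u == PASS_THROUGH]
    # Exactly one pivot column and one value column (unpacking fails otherwise).
    [pivot_col] = [c for c, u in usage.items() if u == PIVOT_COLUMN]
    [value_col] = [c for c, u in usage.items() if u == PIVOT_VALUE]

    output, current, current_key = [], None, None
    for row in rows:
        key = tuple(row[c] for c in key_cols)
        if key != current_key:
            # A change in the set key starts a new output row. Pass-through
            # columns keep the value from the first input row of the set.
            current = {c: row[c] for c in key_cols + pass_cols}
            current.update({v: None for v in pivot_key_values})
            output.append(current)
            current_key = key
        if row[pivot_col] not in pivot_key_values:
            raise ValueError(f"no output column for pivot value {row[pivot_col]!r}")
        # The usage-3 column supplies the value for the matching output column.
        current[row[pivot_col]] = row[value_col]
    return output


usage = {"OrderYear": SET_KEY, "Customer": SET_KEY,
         "OrderMonth": PIVOT_COLUMN, "OrderAmount": PIVOT_VALUE}
orders = [  # hypothetical rows, sorted by OrderYear, Customer
    {"OrderYear": 2005, "Customer": "Jones", "OrderMonth": "July", "OrderAmount": 99.0},
    {"OrderYear": 2005, "Customer": "Smith", "OrderMonth": "July", "OrderAmount": 1200.0},
    {"OrderYear": 2005, "Customer": "Smith", "OrderMonth": "August", "OrderAmount": 350.5},
]
for out_row in pivot(orders, usage, MONTHS):
    print(out_row["Customer"], out_row["July"], out_row["August"])
```

If the same rows arrive unsorted (Smith, Jones, Smith), this model emits three output rows instead of two, with Smith split across two of them; that is why the sketch depends on sorted input.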

The next step is configuring Pivot Default Output. If you expand the node, an Output Columns node appears. Every output column, including the ones generated from the pivot column, has to be created manually. Select the Output Columns node and click Add Column to create 14 columns (OrderYear, Customer, and one for each of the 12 months). Once the columns are created, set their Name property to OrderYear, Customer, January, February, March, April, May, June, July, August, September, October, November, and December.

Next, link the output columns to the input columns. This is done through an output-column property called SourceColumn, whose value has to be the LineageID property of the relevant input column. Find the LineageID of the OrderYear input column (in my case it is 17; see the image above) and set it as the SourceColumn of the OrderYear output column. Do the same for the Customer column.

Next come the month columns. The SourceColumn property of every month column has to be set to the LineageID of OrderAmount. In addition, a property called PivotKeyValue has to be filled in with the value from the pivot column (OrderMonth) that the output column represents. For example, the January column's PivotKeyValue should be “January”, and the February column's should be “February”.

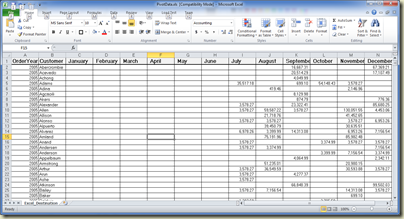

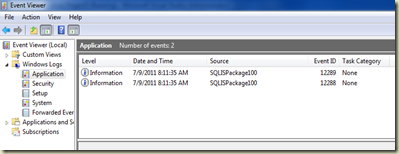

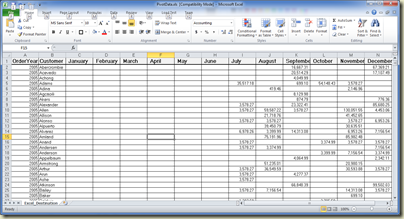
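The SourceColumn and PivotKeyValue wiring can be sketched in Python as well. This is a hypothetical model: `OutputColumn`, `build_output_columns` and `fill_row` are made-up names, input rows are keyed by LineageID, and only LineageID 17 for OrderYear comes from the example above (18, 19 and 20 are invented). In the model, SourceColumn decides which input column feeds an output column, and PivotKeyValue decides which input row within a set supplies the value.

```python
from dataclasses import dataclass
from typing import Optional

MONTHS = ["January", "February", "March", "April", "May", "June",
          "July", "August", "September", "October", "November", "December"]

# Hypothetical LineageIDs; only 17 (OrderYear) comes from the example.
LINEAGE_IDS = {"OrderYear": 17, "Customer": 18, "OrderMonth": 19, "OrderAmount": 20}


@dataclass
class OutputColumn:
    name: str                              # Name property
    source_column: int                     # SourceColumn = an input LineageID
    pivot_key_value: Optional[str] = None  # PivotKeyValue (month columns only)


def build_output_columns(lineage_ids, months):
    """The 14 output columns: two set-key columns plus one per month."""
    columns = [OutputColumn("OrderYear", lineage_ids["OrderYear"]),
               OutputColumn("Customer", lineage_ids["Customer"])]
    # Every month column reads OrderAmount; PivotKeyValue selects the month.
    columns += [OutputColumn(m, lineage_ids["OrderAmount"], m) for m in months]
    return columns


def fill_row(columns, set_rows, pivot_lineage_id):
    """Fill one output row from the input rows (keyed by LineageID) of one set."""
    row = {}
    for col in columns:
        if col.pivot_key_value is None:
            # Columns without a PivotKeyValue copy the first row's value.
            row[col.name] = set_rows[0][col.source_column]
        else:
            # Take SourceColumn from the input row whose pivot value matches.
            row[col.name] = next((r[col.source_column] for r in set_rows
                                  if r[pivot_lineage_id] == col.pivot_key_value), None)
    return row


columns = build_output_columns(LINEAGE_IDS, MONTHS)
smith_rows = [  # hypothetical input rows for one set key, keyed by LineageID
    {17: 2005, 18: "Smith", 19: "July", 20: 1200.0},
    {17: 2005, 18: "Smith", 19: "August", 20: 350.5},
]
print(fill_row(columns, smith_rows, LINEAGE_IDS["OrderMonth"]))
```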

The package is done. Let's send the output to an Excel file for testing. Here is the package execution and its output.

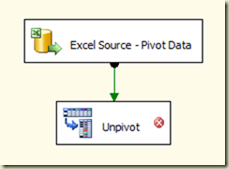

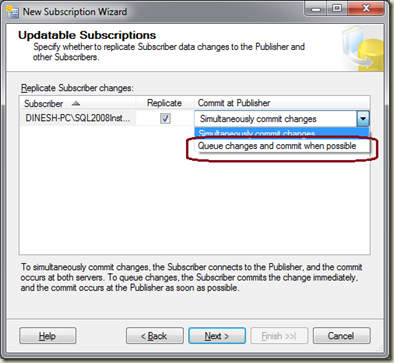

SSIS UNPIVOT is not as tricky as PIVOT. The easiest way to understand it is to reverse the process by unpivoting the Excel sheet created in the previous example. Add a Data Flow Task with an Excel Source on it, connected to the Excel file we created. Then add an Unpivot transformation and connect the source to it.

Now configure the Unpivot transformation. Open the Unpivot Transformation Editor and set it up as follows:

- Select the Pass Through checkbox only for OrderYear and Customer.
- Select all the month columns. This loads all the months into the grid.
- Give a destination column name (e.g. OrderAmount) for the month columns. Make sure the same name is set for all of them; this column will hold the values for the months.
- Make sure the Pivot Key Value has been set properly for each month. This value is written as the row value, in the Pivot Key Value column, for each unpivoted column.
- Finally, give a name for the Pivot Key Value column (OrderMonth).
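Unpivoting with the settings above can also be modelled in a few lines of Python. This is a sketch, not the SSIS implementation: `unpivot` and its parameters are hypothetical, each Pivot Key Value is simply the month column's name, and empty cells are skipped, a choice borrowed from T-SQL UNPIVOT, which drops NULLs. The sheet row is made up.

```python
MONTHS = ["January", "February", "March", "April", "May", "June",
          "July", "August", "September", "October", "November", "December"]


def unpivot(rows, pass_through, value_columns, destination, pivot_key_column):
    """Turn each non-empty value column of every row into its own output row.

    pass_through: columns copied unchanged (Pass Through checkbox).
    value_columns: input column -> Pivot Key Value written for it.
    destination: Destination Column name (e.g. OrderAmount).
    pivot_key_column: name of the Pivot Key Value column (e.g. OrderMonth).
    """
    output = []
    for row in rows:
        for column, key_value in value_columns.items():
            value = row.get(column)
            if value is None:
                continue  # empty cell: no output row (sketch choice)
            out_row = {c: row[c] for c in pass_through}
            out_row[pivot_key_column] = key_value
            out_row[destination] = value
            output.append(out_row)
    return output


# Hypothetical sheet row: Smith ordered only in July and August 2005.
sheet = [{"OrderYear": 2005, "Customer": "Smith",
          **{m: None for m in MONTHS}, "July": 1200.0, "August": 350.5}]
result = unpivot(sheet,
                 pass_through=["OrderYear", "Customer"],
                 value_columns={m: m for m in MONTHS},  # key value = column name
                 destination="OrderAmount",
                 pivot_key_column="OrderMonth")
for r in result:
    print(r)
```

The result has the same shape as the source query's rows (OrderYear, Customer, OrderMonth, OrderAmount), which is the round trip this example performs with the Excel file.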

Done. Add a destination of your choice and check the result.